Kuryr and OVN Integration

10 Oct 2015

If you are not familiar with project Kuryr, you can read this introduction blog post before continuing. In this post I will show the first results of our progress, which came out of a recent virtual sprint the project held. We will see how Kuryr maps the Docker libnetwork API to the Neutron API and how, with OVN as the Neutron plugin backend, container networking is established.

It is important to note that any other Neutron implementation could have been used here instead, for example the reference OVS implementation, Midonet or others.

As I write these lines, Docker has decided to change the libnetwork API yet again, but I think this post is still relevant because it shows the concept itself rather than the details, which we are already working on changing in project Kuryr. Note that some of the code presented here is still under review and not merged yet. We plan to have this working by the OpenStack Tokyo summit, and I will then write another “Getting Started with Kuryr” post describing the steps needed to reproduce everything shown here.

Steps for Installing Kuryr + OVN

1) Have a single-node devstack installation with OVN running. This of course doesn't have to be OVN: Kuryr is transparent to the Neutron backend and only uses the official Neutron and Neutron services APIs.

2) Clone project Kuryr git repository and install it and its dependencies.

3) Export the following environment variables so that Kuryr can authenticate with the Neutron API as admin:

export SERVICE_USER=admin

export SERVICE_PASSWORD=password

export SERVICE_TENANT_NAME=admin

export SERVICE_TOKEN=password

export IDENTITY_URL=http://127.0.0.1:5000/v2.0

Change the values according to your devstack configuration.

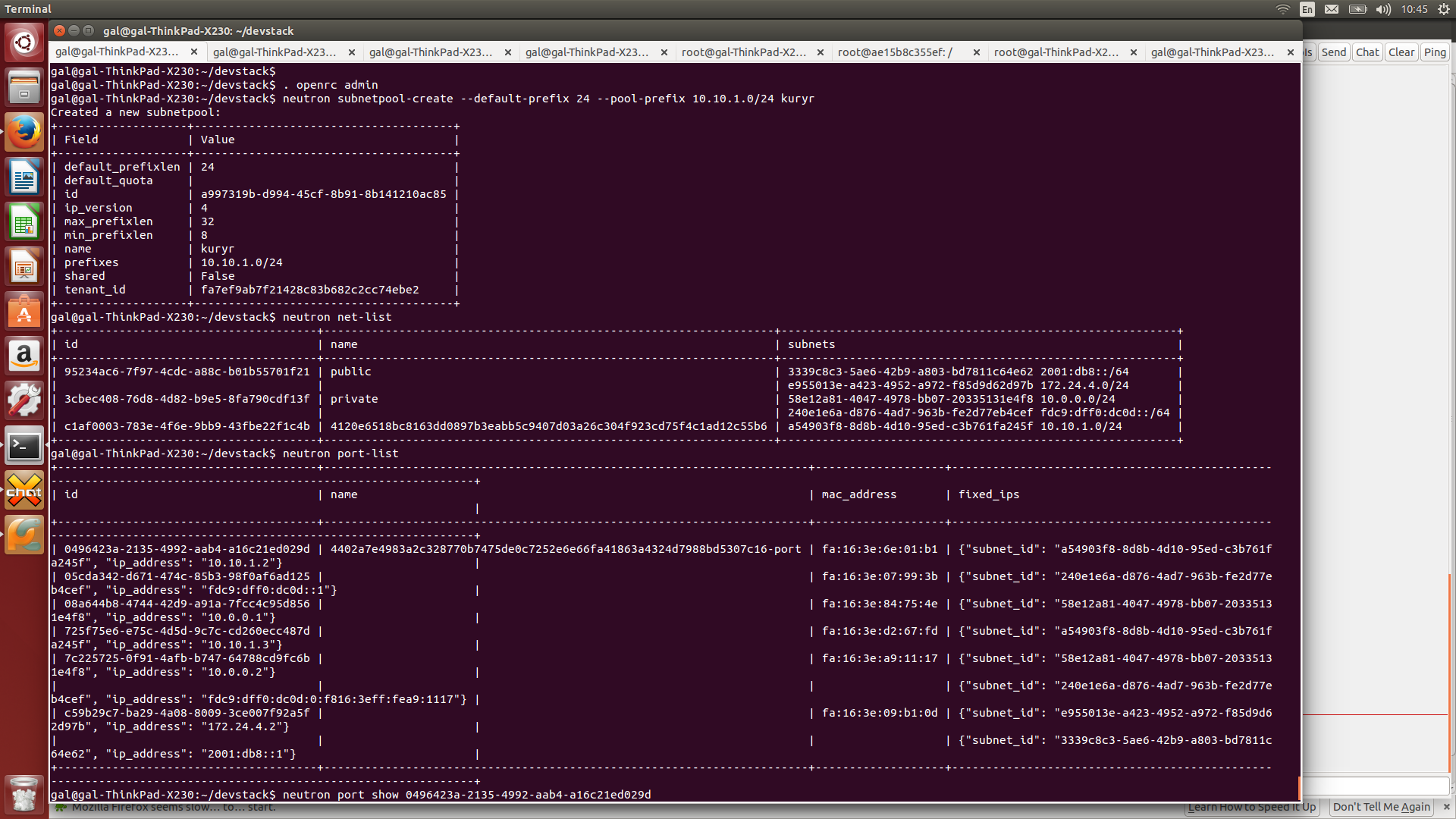

4) Create a subnetpool named kuryr. This is a temporary solution until we support IPAM within Kuryr.

neutron subnetpool-create --default-prefix 24 --pool-prefix 10.10.1.0/24 kuryr

5) Run the Kuryr server as root. (We are currently working on leveraging CAP_NET_ADMIN capabilities in order to possibly avoid having to use root/rootwrap here.)

cd kuryr

./scripts/run_kuryr.sh

The Kuryr server should now be running and be auto-discoverable by Docker. You must have the Docker experimental branch installed (it supports libnetwork remote drivers).
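Before starting the server, it can help to confirm that the variables from step 3 are all set. The following is a minimal sketch of such a check, not code from Kuryr itself; the function name `load_kuryr_credentials` is hypothetical, and only the five variable names come from this post.

```python
import os

# The five variables exported in step 3 of this post.
REQUIRED_VARS = (
    "SERVICE_USER",
    "SERVICE_PASSWORD",
    "SERVICE_TENANT_NAME",
    "SERVICE_TOKEN",
    "IDENTITY_URL",
)


def load_kuryr_credentials(environ=None):
    """Return the settings as a dict, or raise if any is missing or empty.

    Hypothetical helper for illustration; Kuryr's own loading code may differ.
    """
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in REQUIRED_VARS}
```

Calling `load_kuryr_credentials()` with no argument reads the current process environment, so it fails early with the list of missing names instead of failing later during authentication.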

Creating Docker network and a service

Kuryr is now bound to Docker as a remote driver, with OVN as its networking backend. We can start issuing Docker CLI commands and see them mapped to the Neutron API and implemented by OVN.

First, let's create a network named test:

gal@gal-ThinkPad-X230:~$ sudo docker network create -d kuryr test

4120e6518bc8163dd0897b3eabb5c9407d03a26c304f923cd75f4c1ad12c55b6

The result of this command is the Docker id of the created network. We currently use this id in Kuryr as the name of the Neutron network. I am currently proposing a Neutron extension that will support adding tags to Neutron resources. With this feature we could hopefully use the same user-friendly name and add this id as a tag. (We also need a way to receive the Docker network name in Kuryr; currently this information is not passed to the remote driver implementation.)

We also plan to use the tags feature to support pre-allocation of networks/subnets and ports in Neutron and attach them on demand to containers.

You may have noticed the -d kuryr option in the above command. It tells Docker to use the Kuryr libnetwork remote driver.
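To make the mapping of the network creation call concrete, here is a minimal sketch of how a libnetwork create-network payload could be turned into a Neutron network request body, using the Docker id as the network name. This is an illustration and not Kuryr's actual code: the function name is hypothetical, and the payload shape (`NetworkID`, `Options`) is assumed from the libnetwork remote driver protocol, which is changing as noted above.

```python
import json


def neutron_network_body(create_network_request):
    """Translate a libnetwork create-network payload into a Neutron network body.

    Hypothetical helper; it only builds the request body and sends nothing.
    """
    docker_network_id = create_network_request["NetworkID"]
    # The Docker network id becomes the Neutron network name.
    return {"network": {"name": docker_network_id, "admin_state_up": True}}


# Payload shape assumed from the libnetwork remote driver protocol.
payload = json.loads(
    '{"NetworkID": "4120e6518bc8163dd0897b3eabb5c9407d03a26c304f923cd75f4c1ad12c55b6",'
    ' "Options": {}}'
)
body = neutron_network_body(payload)
print(json.dumps(body, indent=2))
```

Once tags are available in Neutron, the same function could put a friendly name in `name` and carry the Docker id as a tag instead.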

The next step is to publish the service foo:

gal@gal-ThinkPad-X230:~$ sudo docker service publish foo.test

4402a7e4983a2c328770b7475de0c7252e6e66fa41863a4324d7988bd5307c16

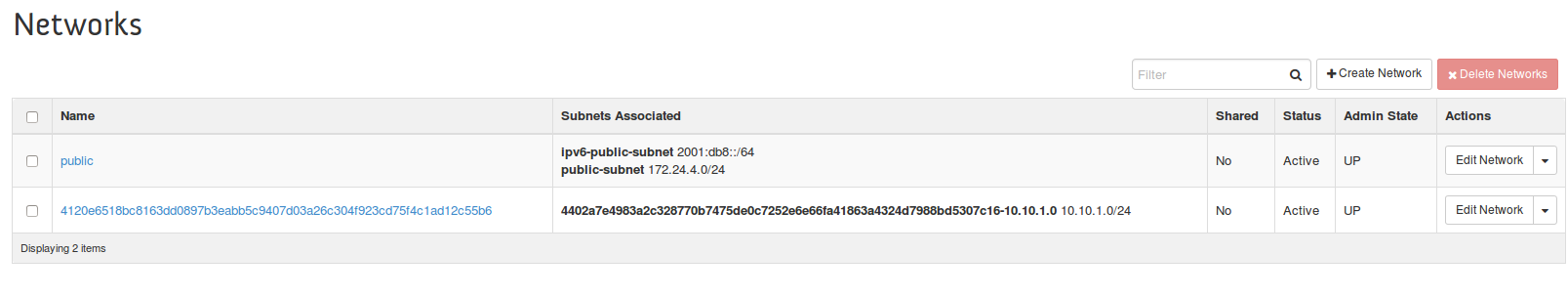

If we now look at the Horizon UI, we can see that the network and subnet have been added to Neutron.

We can see the added network with the Docker id as its name and the subnet attached to it. This Docker API call maps to the endpoint creation hook and triggers the creation of a corresponding Neutron port. Kuryr doesn't need to re-create the subnet every time a new endpoint is added; it creates it only the first time and reuses the same one in subsequent calls.
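The "create the subnet once, then reuse it" behaviour can be sketched with a small in-memory stand-in for Neutron. Everything here is hypothetical and for illustration only: `FakeNeutron` is not a real client, and `create_endpoint` is not Kuryr's handler; the sketch only shows the control flow described above.

```python
import itertools


class FakeNeutron:
    """In-memory stand-in for the Neutron API (illustration only)."""

    def __init__(self):
        self.subnets = {}  # network_id -> subnet dict
        self.ports = []
        self._ids = itertools.count(1)

    def create_subnet(self, network_id, cidr):
        subnet = {"id": "subnet-%d" % next(self._ids),
                  "network_id": network_id, "cidr": cidr}
        self.subnets[network_id] = subnet
        return subnet

    def create_port(self, network_id, subnet_id, name):
        port = {"id": "port-%d" % next(self._ids), "name": name,
                "network_id": network_id, "subnet_id": subnet_id}
        self.ports.append(port)
        return port


def create_endpoint(neutron, network_id, endpoint_id, cidr="10.10.1.0/24"):
    """Create a port for the endpoint, creating the subnet only on first use."""
    subnet = neutron.subnets.get(network_id)
    if subnet is None:
        subnet = neutron.create_subnet(network_id, cidr)
    return neutron.create_port(network_id, subnet["id"], name=endpoint_id)


neutron = FakeNeutron()
first = create_endpoint(neutron, "net-test", "endpoint-a")
second = create_endpoint(neutron, "net-test", "endpoint-b")
print(len(neutron.subnets), "subnet,", len(neutron.ports), "ports")
```

Both ports end up on the same subnet, which is the reuse described in the paragraph above.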

Running a container and attaching it to the service

The next step is to run a container and attach it to the service we just created. This should allocate an IP to the container using Neutron IPAM and bind the container namespace to the “networking infrastructure”, in our case the OVS bridge.

Starting the container

gal@gal-ThinkPad-X230:~$ sudo docker run --rm --net=none -it ubuntu /bin/bash

root@ae15b8c355ef:/#

And then attaching this container to the created service

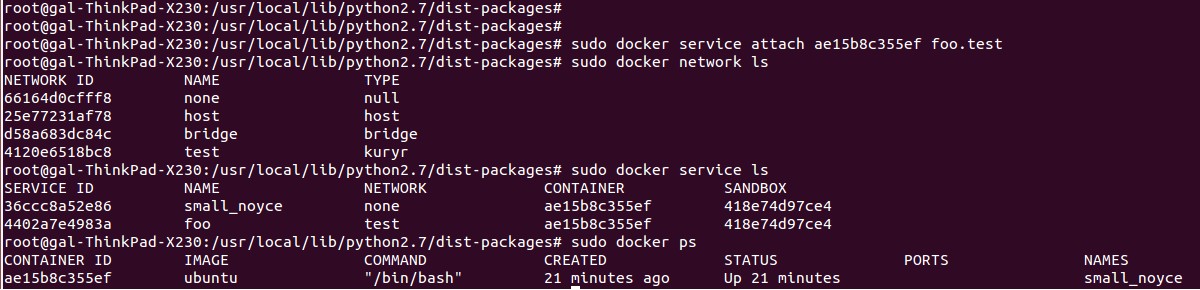

What happens here is that the “Join” API of the Kuryr libnetwork remote driver is called, and it binds the host end of the container namespace's veth pair to the OVS bridge. (At this point the Neutron port has already been created.)

To do that, Kuryr has a generic VIF binding framework which knows (by configuration) which binding script to call, depending on which Neutron backend is configured. (This mimics what Nova does when creating a VM on a specific host.)
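As a rough sketch of this kind of configuration-driven dispatch, the snippet below picks a binding script from the configured backend and builds the command line to run. The script names, the directory and the argument order are all hypothetical, since the post does not show them; only the fact that OVN uses the OVS binding script comes from this walkthrough.

```python
import posixpath

# Hypothetical mapping from the configured Neutron backend to a script name.
BINDING_SCRIPTS = {
    "ovn": "ovs",        # OVN ports are plugged into an OVS bridge
    "ovs": "ovs",
    "midonet": "midonet",
}


def binding_command(backend, action, port_id, ifname, bindir="/usr/libexec/kuryr"):
    """Return the argv for the backend's binding script (nothing is executed).

    The directory and argument order are assumptions made for illustration.
    """
    try:
        script = BINDING_SCRIPTS[backend]
    except KeyError:
        raise ValueError("no binding script configured for backend %r" % backend)
    return [posixpath.join(bindir, script), action, port_id, ifname]


# Placeholder port id and interface name, not values from a real deployment.
print(binding_command("ovn", "bind", "<neutron-port-id>", "tap-example"))
```

Keeping the backend-specific work in separate scripts means the driver itself does not need to know how each Neutron plugin wires up an interface.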

We can see from the screenshot that the service is attached to the network correctly, and we can see that the port and network are also added to Neutron using the Neutron CLI.

Container port in OVN

If we look at the container interfaces, we can see that we have an eth0 interface with an IP allocated by Neutron

root@ae15b8c355ef:/# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

44: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:6e:01:b1 brd ff:ff:ff:ff:ff:ff

inet 10.10.1.2/24 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe6e:1b1/64 scope link

valid_lft forever preferred_lft forever
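The address on eth0 can be checked against the kuryr subnetpool created in step 4. The sketch below uses only Python's standard `ipaddress` module and the values from this post (pool prefix 10.10.1.0/24, default prefix length 24); the function name is hypothetical, and the host count is plain arithmetic that ignores any addresses Neutron reserves for itself.

```python
import ipaddress


def subnets_from_pool(pool_prefix, default_prefixlen):
    """List the subnets of the default size that fit in the pool prefix."""
    pool = ipaddress.ip_network(pool_prefix)
    return list(pool.subnets(new_prefix=default_prefixlen))


subnets = subnets_from_pool("10.10.1.0/24", 24)
container_ip = ipaddress.ip_address("10.10.1.2")

# Network and broadcast addresses are not assignable to hosts.
assignable = subnets[0].num_addresses - 2

print("subnets available:", len(subnets))
print("assignable addresses:", assignable)
print("container address in pool:", container_ip in subnets[0])
```

With a /24 pool and a /24 default prefix, the pool can hand out exactly one subnet, which matches the single network used in this walkthrough.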

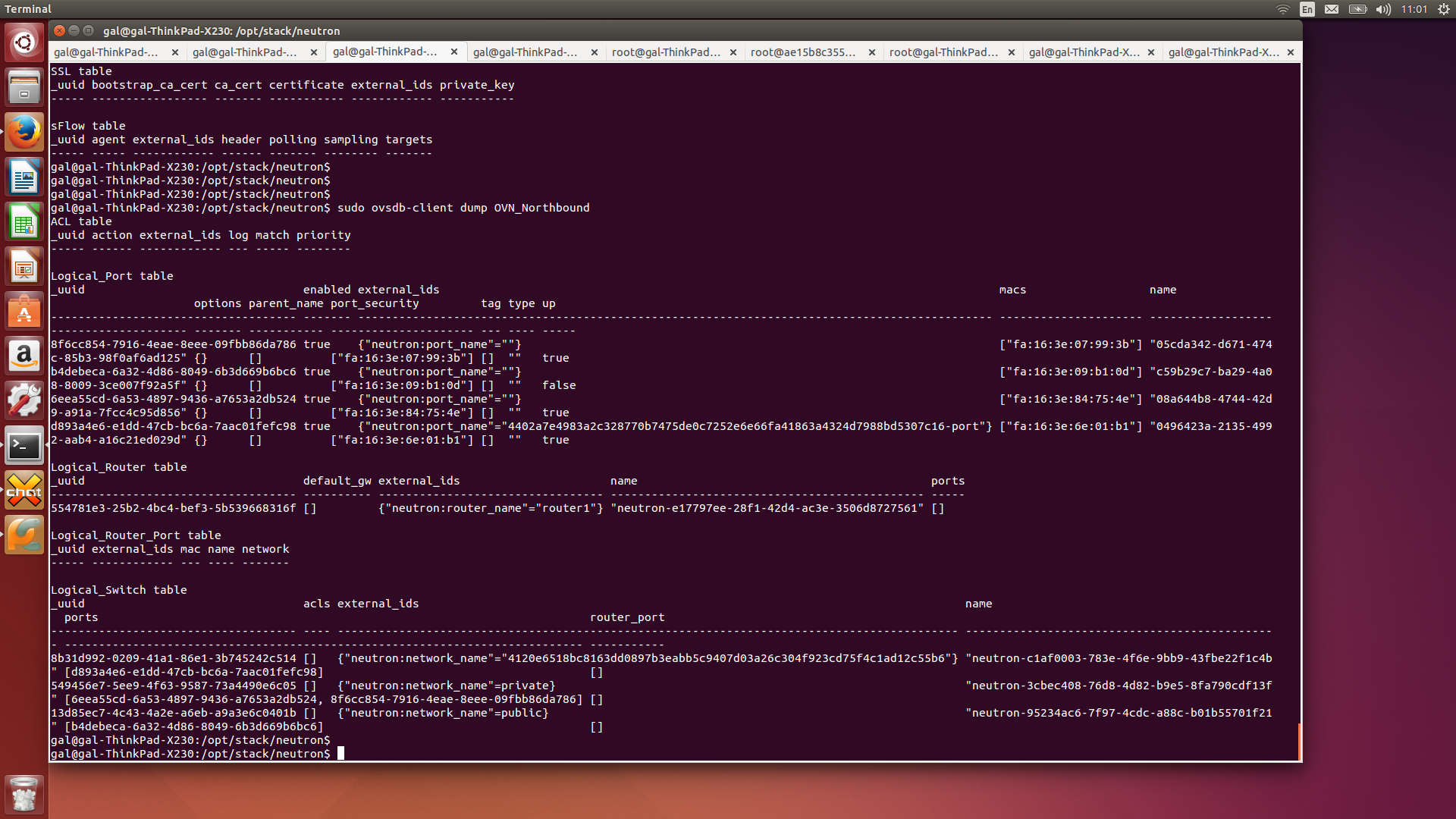

When we attach the container to the service, Kuryr calls the OVS binding script, which adds the external end of the container veth pair to br-int and sets an external id on that interface equal to the Neutron port id.

This process mimics what Nova does for a VM. The OVN controller now notices the added port and knows to link it to the correct logical port based on the Neutron port id (stored in the external id). A similar operation happens in pure Neutron with OVN and with the OVS L2 agent.
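The binding step can be sketched as the `ovs-vsctl` invocation such a script would typically run. This is an assumption-laden illustration rather than the contents of Kuryr's script: it assumes the external id key is `iface-id`, the key conventionally used to match an OVS interface to a Neutron or OVN logical port, and the interface name and port id below are placeholders. The function only builds the command; it does not execute it.

```python
def ovs_bind_command(host_ifname, neutron_port_id, bridge="br-int"):
    """Build an ovs-vsctl argv that plugs a veth end into the bridge and tags it.

    Assumes the external id key is iface-id; nothing is executed here.
    """
    return [
        "ovs-vsctl",
        "add-port", bridge, host_ifname,
        "--",
        "set", "Interface", host_ifname,
        "external_ids:iface-id=%s" % neutron_port_id,
    ]


# Placeholder values for illustration only.
cmd = ovs_bind_command("tap-example", "<neutron-port-id>")
print(" ".join(cmd))
```

Because the external id carries the Neutron port id, the controller can associate the new interface with the matching logical port without any further input from Kuryr.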

The following screenshot shows the OVN Northbound DB as it is populated with the added port and network.

Summary

What I showed you in this blog post is just the first basic milestone of Kuryr. I chose OVN as the Neutron backend for this example, but this should work the same way with Midonet, the OVS L2 agent or any other Neutron plugin. As the Docker libnetwork API has changed, we are working to adjust Kuryr accordingly.

We have a very interesting roadmap ahead of us: integrating with Docker Swarm for multi-node setups, tackling the common use case of nested containers in a VM (and integration with OpenStack Magnum), and leveraging Neutron features and services for container networking.

If you are interested in the project, we welcome anyone to join us in this effort. You can follow the Kuryr Trello board to track our progress or read the Kuryr Neutron spec to understand the road ahead of us.
